Discovered: Botnet Costing Display Advertisers over Six Million Dollars per Month

| Chameleon Botnet |
|---|
| Date of discovery: 28 February, 2013 |
| Known as: Chameleon Botnet |
| Discovered by: spider.io |
| Activity identified: Botnet emulates human visitors on select websites causing billions of display ad impressions to be served to the botnet. |
| Number of host machines: over 120,000 have been discovered so far |
| Geolocation of host machines: US residential IP addresses |
| Reported User Agent of the bots: Mozilla/5.0 (compatible; MSIE 9.0; Windows NT 6.1; Trident/5.0) and Mozilla/5.0 (compatible; MSIE 9.0; Windows NT 6.1; WOW64; Trident/5.0) |
| Proportion of traffic that is botnet traffic from IP addresses of host machines: 90% (diluted by gateway IPs) |
| Number of target websites across which the botnet operates: at least 202 |
| Proportion of traffic across the target websites that is botnet traffic: at least 65% |
| Number of ad impressions served to the botnet per month: at least 9 billion |
| Number of distinct ad-exchange cookies associated with the botnet per month: at least 7 million |
| Average click-through rate generated by the botnet: 0.02% |
| Average mouse-movement rate generated by the botnet: 11% |
| Average CPM paid by advertisers for ad impressions served to the botnet: $0.69 |
| Monthly cost to advertisers of ad impressions served to the botnet: at least $6.2 million |

Introduction

In this disclosure post we report the discovery of the Chameleon botnet by spider.io. The discovery follows the announcement by Microsoft and Symantec, on February 6th of this year, of the take-down of the Bamital botnet. Both the Chameleon botnet and the Bamital botnet have cost online advertisers millions of dollars. The Chameleon botnet is notable for the size of its financial impact: at a cost to advertisers of over 6 million dollars per month, it is at least 70 times more costly than the Bamital botnet. However, the Chameleon botnet is arguably even more notable for being the first botnet found to be impacting display advertisers at scale (as opposed to text-link advertisers).

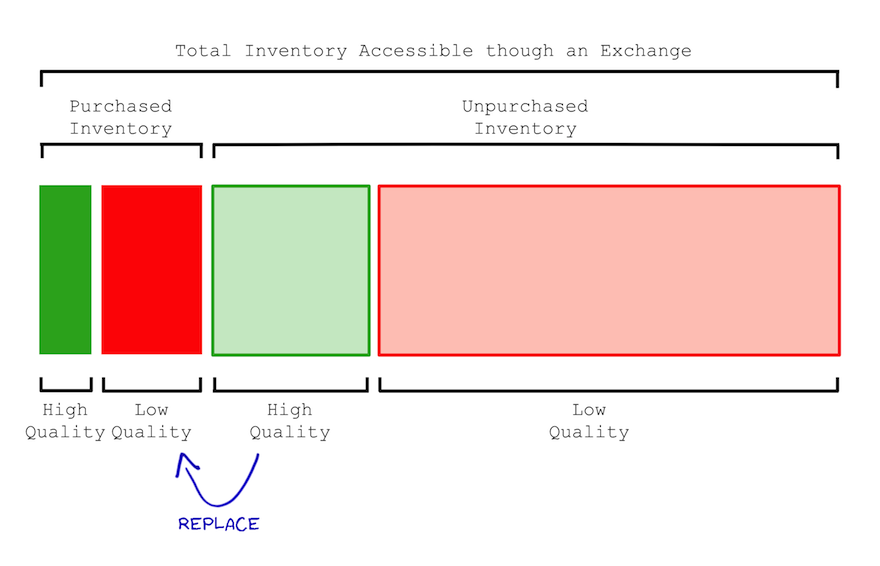

Display advertisers use algorithms with varying degrees of complexity to target their advertising at the most appropriate website visitors. These algorithms involve continually measuring websites and their visitors to determine engagement levels with website content and with ad creatives. For the Chameleon botnet to evade detection and to impact display advertisers to the extent that it has requires a surprising level of sophistication.

spider.io has been tracking anomalous behaviour associated with the Chameleon botnet since December, 2012. In February of this year the extent of the Chameleon botnet’s principal web-browsing activity was established. This was achieved as part of spider.io’s broader work with leading display ad exchanges and demand-side platforms to identify deviant consumption of display advertising media. In particular, DataXu and media6degrees have been proactive partners.

Infection

Individual bots within the Chameleon botnet run on host machines with Microsoft Windows as the operating system. Bots access the Web through a Flash-enabled Trident-based browser that executes JavaScript.

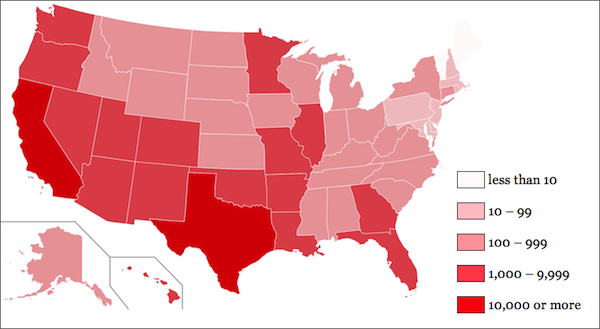

More than 120,000 host machines have been identified so far. 95% of these machines access the Web from residential US IP addresses. The geographic distribution of these US IP addresses is shown below.

Functionality

spider.io has observed the Chameleon botnet targeting a cluster of at least 202 websites. 14 billion ad impressions are served across these 202 websites per month. The botnet accounts for at least 9 billion of these ad impressions. At least 7 million distinct ad-exchange cookies are associated with the botnet per month. Advertisers are currently paying $0.69 CPM on average to serve display ad impressions to the botnet.
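The monthly cost figure follows directly from the two numbers above, since CPM is the price per thousand impressions. A minimal sketch of the arithmetic is given here; the function and constant names are ours, chosen for illustration only.

```python
def monthly_cost_usd(impressions: int, cpm_usd: float) -> float:
    """Cost of serving `impressions` ads at `cpm_usd` dollars per 1,000 impressions."""
    return impressions / 1000 * cpm_usd


# Figures reported in this post (both are stated lower bounds or averages).
BOT_IMPRESSIONS_PER_MONTH = 9_000_000_000
AVERAGE_CPM_USD = 0.69

cost = monthly_cost_usd(BOT_IMPRESSIONS_PER_MONTH, AVERAGE_CPM_USD)
print(f"Estimated monthly cost: ${cost:,.0f}")
```

Because the impression count is a lower bound, the result (about $6.2 million) is a lower bound as well.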

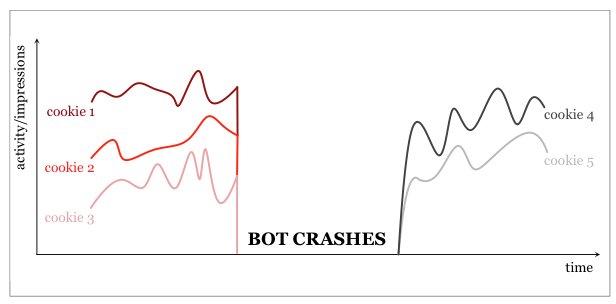

The bots subject host machines to heavy load, and the bots appear to crash and restart regularly. The bots largely restrict themselves to the 202 target websites. Each bot often masquerades as several concurrent website visitors, each visiting multiple pages across multiple websites. When a bot crashes, the concurrent sessions end abruptly; upon restart, the bot requests a new set of cookies. These crashes and idiosyncratic site-traversal patterns are just two of the many bot features that make for a distinctive bot signature.

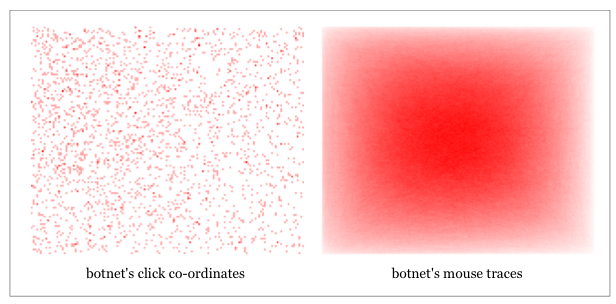

Chameleon is a sophisticated botnet. Individual bots run Flash and execute JavaScript. Bots generate click traces indicative of normal users. Bots also generate client-side events indicative of normal user engagement. They click on ad impressions with an average click-through rate of 0.02% and, surprisingly, they generate mouse traces across 11% of ad impressions.

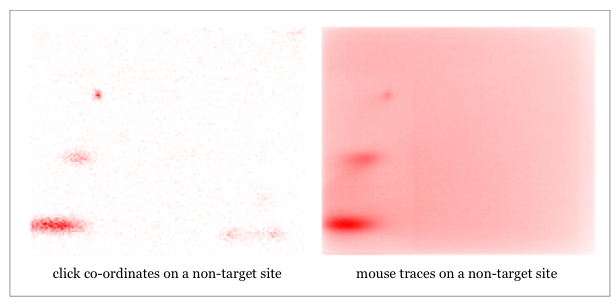

Despite the sophistication of each individual bot at the micro level, the traffic generated by the botnet in aggregate is highly homogeneous. All the bot browsers report themselves as being Internet Explorer 9.0 running on Windows 7. The bots visit the same set of websites, with little variation. The bots generate uniformly random click co-ordinates across ad impressions, and they also generate randomised mouse traces. As an illustration, the botnet’s click co-ordinates and mouse traces across 300×250-pixel ad impressions are shown below. By way of contrast, we also show click co-ordinates and mouse traces which were recorded across 300×250-pixel ad impressions on a website outside of the botnet’s target cluster of websites. Calls to action within ad creatives and the position of ads relative to webpage content create organic skews and hotspots not present across botnet traffic.
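One simple way to quantify the difference between uniformly random clicks and organic clicks with hotspots is a chi-square goodness-of-fit statistic over a grid of cells. The sketch below is an illustration only and is not spider.io's detection method: the grid size, the synthetic data, and the hypothetical call-to-action position at (150, 200) are all assumptions made for the example.

```python
import random

AD_WIDTH, AD_HEIGHT = 300, 250  # ad size discussed in the text


def chi_square_uniformity(clicks, cols=6, rows=5):
    """Pearson chi-square statistic for clicks binned into a cols x rows grid.

    Under a uniform click distribution the statistic stays close to the
    number of cells minus one; hotspots push it far higher.
    """
    if not clicks:
        raise ValueError("need at least one click")
    counts = [0] * (cols * rows)
    for x, y in clicks:
        col = min(int(x * cols / AD_WIDTH), cols - 1)
        row = min(int(y * rows / AD_HEIGHT), rows - 1)
        counts[row * cols + col] += 1
    expected = len(clicks) / (cols * rows)
    return sum((c - expected) ** 2 / expected for c in counts)


def clamp(value, upper):
    """Keep a co-ordinate inside the ad, between 0 and `upper`."""
    return max(0.0, min(value, upper))


rng = random.Random(0)  # fixed seed so the example is repeatable

# Synthetic stand-in for botnet clicks: uniform over the whole ad.
uniform_clicks = [
    (rng.uniform(0, AD_WIDTH), rng.uniform(0, AD_HEIGHT)) for _ in range(5000)
]

# Synthetic stand-in for organic clicks: clustered around a hypothetical
# call-to-action button centred at (150, 200).
hotspot_clicks = [
    (clamp(rng.gauss(150, 30), AD_WIDTH), clamp(rng.gauss(200, 20), AD_HEIGHT))
    for _ in range(5000)
]

uniform_stat = chi_square_uniformity(uniform_clicks)
hotspot_stat = chi_square_uniformity(hotspot_clicks)
print(f"uniform clicks: {uniform_stat:.1f}")
print(f"hotspot clicks: {hotspot_stat:.1f}")
```

With a 6×5 grid there are 29 degrees of freedom, so a statistic near 29 is consistent with uniform clicks, while a value orders of magnitude larger indicates the kind of skew that organic traffic produces. A test like this is one signal among many and would not identify a bot on its own.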

Protection

A blacklist of 5,000 IP addresses of the worst bots within the Chameleon botnet may be found here.
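As a rough illustration of how such a blacklist might be applied, the sketch below filters incoming requests by IP address. The file format (one IP address per line, with optional `#` comments) is an assumption, not a description of the published list, and the addresses shown are reserved documentation addresses rather than real bot IPs.

```python
import ipaddress


def load_blacklist(lines):
    """Parse an iterable of text lines into a set of IP address objects.

    Assumes one address per line; blank lines and '#' comments are skipped.
    """
    entries = set()
    for line in lines:
        text = line.strip()
        if not text or text.startswith("#"):
            continue
        entries.add(ipaddress.ip_address(text))
    return entries


def is_blacklisted(ip, blacklist):
    """Return True if the textual address `ip` appears in the blacklist."""
    return ipaddress.ip_address(ip) in blacklist


# Placeholder entries from the reserved documentation ranges.
sample_lines = ["# sample blacklist", "192.0.2.1", "", "198.51.100.7"]
blacklist = load_blacklist(sample_lines)

for visitor_ip in ["192.0.2.1", "203.0.113.5"]:
    verdict = "blocked" if is_blacklisted(visitor_ip, blacklist) else "allowed"
    print(visitor_ip, verdict)
```

Since the table above notes that botnet traffic is diluted by gateway IPs, an IP-level filter like this can also block some legitimate visitors who share an address with an infected machine.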