Extreme Architecting

As Simon covered in our first blog post, our quest is to detect web bots that are up to no good. Of course, this includes bots pretending to be real people, not just those with bot user agents! Otherwise it would be pretty easy, wouldn’t it?

To help our customers, we need to receive and analyse requests from their websites, making a real-time judgement as to the nature and intentions of an “actor” (a person or bot making requests to the website). Then we need to push our real-time classifications into an analytics dashboard/API. This would be tricky enough if we were only analysing low levels of traffic. But before the year is out, we expect to be analysing over a billion events per day (which would be four times the number of tweets currently sent each day). Because of the volume of traffic we process, and our customers’ mitigation requirements, we need our systems to be highly available and massively scalable.

Don’t get attached

As a startup, we have very little technological baggage, but we also have lots of systems to build and grow very quickly. As a result, we’re constantly watching the tech landscape for new or improved ‘off-the-shelf’ (or ‘straight-from-github’) software. We have a strong preference for open source wherever possible, so we are free to make changes that we can contribute back to the community. Sometimes, this means throwing away a home-grown system in the early stages of its life, to replace it with a recently released community alternative. We aren’t afraid to do this. Just because something looked right three months ago doesn’t mean it’s still right now. It’s all too easy to get attached to the current way of doing things, and that attachment has to be overcome when a better solution presents itself.

The key to this ‘extreme architecting’ is to keep systems separated as far as possible, so they are freely interchangeable. The common pattern of abstraction used in software (with Java interfaces, for example) can easily be overlooked when building large, tightly coupled computer systems that require high performance. In a stream-based architecture it’s fairly easy to attach new components to the message queuing servers and run systems in parallel to compare results and performance, before selecting the best one for the job.
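To make the idea concrete, here is a minimal sketch of running two interchangeable components side by side on the same stream and collecting the events they disagree on. It is an illustration rather than production code: the two classifiers, the event fields (`user_agent`, `requests_per_minute`) and the threshold of 300 are all hypothetical, and an in-memory list stands in for a message queue.

```python
from typing import Callable, Dict, Iterable, List

# Any callable that maps a request event to a label can be swapped in.
Classifier = Callable[[dict], str]


def current_classifier(event: dict) -> str:
    """Hypothetical incumbent: looks only at the declared user agent."""
    return "bot" if "bot" in event.get("user_agent", "").lower() else "human"


def candidate_classifier(event: dict) -> str:
    """Hypothetical replacement: also flags very high request rates."""
    if event.get("requests_per_minute", 0) > 300:
        return "bot"
    return current_classifier(event)


def compare(events: Iterable[dict],
            implementations: Dict[str, Classifier]) -> List[dict]:
    """Feed every event to every implementation; keep the disagreements."""
    disagreements = []
    for event in events:
        labels = {name: impl(event) for name, impl in implementations.items()}
        if len(set(labels.values())) > 1:
            disagreements.append({"event": event, "labels": labels})
    return disagreements


# Stand-in for events read from a queue (made-up sample data).
events = [
    {"user_agent": "Mozilla/5.0", "requests_per_minute": 12},
    {"user_agent": "Googlebot/2.1", "requests_per_minute": 40},
    {"user_agent": "Mozilla/5.0", "requests_per_minute": 900},
]
disagreements = compare(events, {
    "current": current_classifier,
    "candidate": candidate_classifier,
})
```

Because both implementations share one small interface, either can be dropped without touching the code that feeds them, which is the property that makes a swap cheap.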

Storm

A recent example of this is Storm, released by Nathan Marz of Twitter. Storm is a distributed stream-processing framework, and something we’d been keeping an eye on for some time. One look at the slides showed the architecture that led to the creation of Storm was the same architecture we had internally—in fact, we’d recently started our own project to create something similar to Storm in light of our experiences. We were well aware that in the coming months our load was going to move from a few hundred requests a second to potentially tens of thousands (and more!). As a result, we needed to ensure our new system was reliable and massively scalable.

When the Storm project was open-sourced, I spent a few hours seeing how our system could make use of it. We had parts of our system converted to it in a matter of days. This was thanks to a combination of excellent documentation and the fact that our system was already structured in a similar way, so we did not have to take a great conceptual leap. Rather than spending months writing our own code, we were able to spend weeks adapting our existing systems to its framework and carrying out exploratory testing. We like to know how our systems will respond under heavy (and crushing) loads. It’s better than being surprised when something goes wrong!

We’ve been running Storm on production loads in our test environment for a couple of weeks now, and are preparing to roll out to our full production environment, which would make us one of the first companies outside Twitter to do so. We’re really excited about the project and hope to contribute back to it. There’s a growing community already making contributions to the ecosystem, and we’re grateful to Nathan for all his hard work.

Storm – Uses

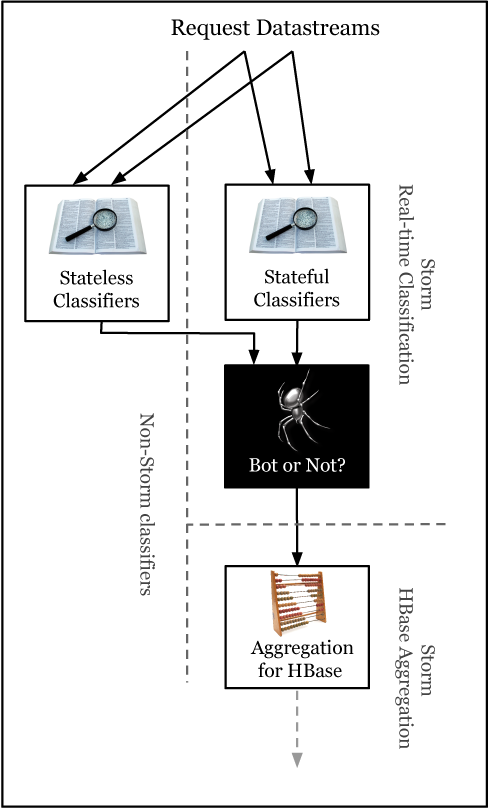

Our new Storm cluster provides the base for several components in our system. Our stateful classifiers consume multiple streams of information about incoming requests and join them together to identify bot and user behaviours. We use the Esper stream processing engine to perform the analysis, while Storm provides the fault-tolerance and message distribution layer required to make Esper scale. We also have a set of stateless classifiers that make decisions based on single requests. These are manually scaled (and written in Python) at present, but will be moved under Storm’s management using its ‘multilang’ feature.
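The difference between the two kinds of classifier can be sketched in a few lines of plain Python. This is an illustration only: it uses neither Esper nor Storm, and the two streams (page requests and JavaScript beacon events), the rule and the `min_requests` threshold are hypothetical examples rather than our real signals.

```python
from collections import defaultdict


def stateless_classify(request: dict) -> str:
    """Decide from a single request alone; nothing is remembered."""
    agent = request.get("user_agent", "").lower()
    return "declared_bot" if "bot" in agent else "unknown"


class StatefulJoinClassifier:
    """Joins two hypothetical streams per actor: page requests and
    JavaScript beacon events. An actor that requests several pages but
    never fires a beacon is flagged as suspect."""

    def __init__(self, min_requests: int = 3):
        self.min_requests = min_requests
        self.requests = defaultdict(int)  # actor_id -> page requests seen
        self.beacons = defaultdict(int)   # actor_id -> beacon events seen

    def on_request(self, actor_id: str) -> str:
        self.requests[actor_id] += 1
        return self.verdict(actor_id)

    def on_beacon(self, actor_id: str) -> str:
        self.beacons[actor_id] += 1
        return self.verdict(actor_id)

    def verdict(self, actor_id: str) -> str:
        enough_requests = self.requests[actor_id] >= self.min_requests
        if enough_requests and self.beacons[actor_id] == 0:
            return "suspect"
        return "unknown"


clf = StatefulJoinClassifier(min_requests=3)
for _ in range(3):
    clf.on_request("actor-a")   # three pages, no beacon
clf.on_beacon("actor-b")        # beacon fired once
for _ in range(3):
    clf.on_request("actor-b")   # three pages after the beacon

verdict_a = clf.verdict("actor-a")
verdict_b = clf.verdict("actor-b")
single = stateless_classify({"user_agent": "ExampleBot/1.0"})
```

The stateless function can be scaled by simply running more copies, whereas the stateful one needs every event for a given actor to reach the same instance, which is why message distribution matters for it.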

Storm is also finding uses within our database architecture, providing first-line aggregation to reduce the frequency of writes to the HBase cluster that runs our analytics dashboard. In time, we plan to take advantage of Storm’s distributed RPC (remote procedure call) features to enhance our customer-facing APIs.
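First-line aggregation is simple in principle: count events in memory and write one combined update per batch instead of one write per event. The sketch below shows the idea under stated assumptions. The `write_batch` callable is a hypothetical stand-in for whatever actually writes to the database (it is not an HBase API), and the key layout and `flush_every` value are made up for the example.

```python
from collections import Counter
from typing import Callable, Dict, Tuple

Key = Tuple[str, str]  # (customer_id, label), a hypothetical key layout


class PreAggregator:
    """Buffers per-key counts and emits them as one batched write."""

    def __init__(self, write_batch: Callable[[Dict[Key, int]], None],
                 flush_every: int = 1000):
        self.write_batch = write_batch   # hypothetical database writer
        self.flush_every = flush_every   # events to buffer before writing
        self._counts: Counter = Counter()
        self._pending = 0

    def record(self, customer_id: str, label: str) -> None:
        self._counts[(customer_id, label)] += 1
        self._pending += 1
        if self._pending >= self.flush_every:
            self.flush()

    def flush(self) -> None:
        """Write the buffered counts (if any) and reset the buffer."""
        if self._counts:
            self.write_batch(dict(self._counts))
        self._counts.clear()
        self._pending = 0


writes = []  # stands in for the database: one entry per batched write
agg = PreAggregator(write_batch=writes.append, flush_every=4)
for label in ["bot", "bot", "human", "bot"]:
    agg.record("customer-1", label)
```

Here four events turn into a single write. A real deployment would presumably also flush on a timer, so that quiet periods do not leave counts sitting in memory.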

At the cutting edge

We came to the realisation that most of our systems run “0.x” version software. With proper testing, and with a focus on ensuring graceful service degradation in the face of component failure, we don’t consider this a problem. Rather, we see it as a necessary part of using community software at the cutting edge of data processing. If you want to work with big data, using some technologies you’ll have heard of and some you won’t, why not have a look at our careers page?